There is also a lot of fear of AI taking over more and more types of jobs. Some examples:

- Automated investment companies like Wealthfront or Betterment.

- Robo-reporters covering some sports or stock-market news.

IBT recently ran an article discussing a report put out by Stanford titled Artificial Intelligence and Life in 2030 (it's a PDF). The report breaks out various trends related to AI, such as:

- Large-scale machine learning

- Deep learning - which the report describes in terms of training convolutional neural networks

- Reinforcement learning - a field of machine learning where the machine "learns" from its mistakes.

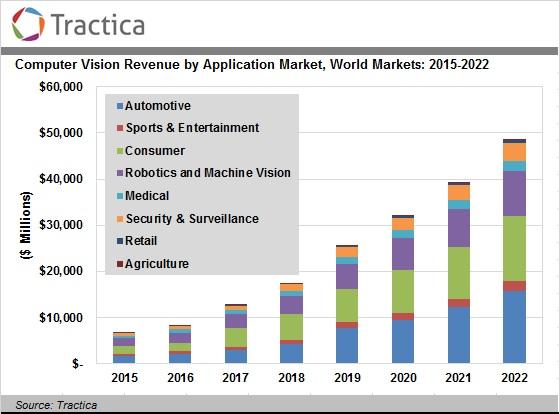

- Computer vision - a big user of deep learning

- Natural Language Processing - Siri, Alexa, Hey Google, etc.

The report then goes into some of the great strides in AI that have taken place over the last few years. For those interested in self-driving cars, it offers this summary:

> During the first Defense Advanced Research Projects Agency (DARPA) “grand challenge” on autonomous driving in 2004, research teams failed to complete the challenge in a limited desert setting. But in eight short years, from 2004-2012, speedy and surprising progress occurred in both academia and industry. Advances in sensing technology and machine learning for perception tasks has sped progress and, as a result, Google’s autonomous vehicles and Tesla’s semi-autonomous cars are driving on city streets today. Google’s self-driving cars, which have logged more than 1,500,000 miles (300,000 miles without an accident), are completely autonomous—no human input needed. Tesla has widely released self-driving capability to existing cars with a software update. Their cars are semi-autonomous, with human drivers expected to stay engaged and take over if they detect a potential problem. It is not yet clear whether this semi-autonomous approach is sustainable, since as people become more confident in the cars’ capabilities, they are likely to pay less attention to the road, and become less reliable when they are most needed. The first traffic fatality involving an autonomous car, which occurred in June of 2016, brought this question into sharper focus.

It also talks about home-service robots such as vacuum cleaners and then gets into a current hotbed of machine learning - health care. Could AI do some of the things that doctors do now?

> Looking ahead to the next fifteen years, AI advances, if coupled with sufficient data and well-targeted systems, promise to change the cognitive tasks assigned to human clinicians. Physicians now routinely solicit verbal descriptions of symptoms from presenting patients and, in their heads, correlate patterns against the clinical presentation of known diseases. With automated assistance, the physician could instead supervise this process, applying her or his experience and intuition to guide the input process and to evaluate the output of the machine intelligence. The literal “hands-on” experience of the physician will remain critical. A significant challenge is to optimally integrate the human dimensions of care with automated reasoning processes.

So all of this is both fascinating and scary. I don't think anyone can completely understand the long-term impact of AI technologies. The range of tasks that we've been able to turn over to computers and other machines is, of course, staggering. Who knows what people will think up next? But, as anyone who has worked with computers knows, they don't think. Machine learning is incredible, but machines don't think or learn like humans do. They perform tasks, they mine data, they identify patterns, they monitor innumerable sensors and correlate data from them. But they're not intelligent.

The Stanford report takes an optimistic view of the future of AI. We're going to continue to try to find new uses for computers in our lives; it's happening whether we want it to or not. But I think we'll find there are many things that they just can't do.